Is it the Best of Times or the Worst of Times?

Silicon Valley is dead… true or false?

We’ve seen this storyline before. Indeed, there is a long history of pundits issuing premature epitaphs lamenting “the end” of the most innovative tech center in the world.

Some say “this time is different,” pointing to the big layoffs at Meta, Amazon, and other tech companies, plus the unexpected failure of Silicon Valley Bank (SVB), the financial heart of countless innovative tech startups.

Nvidia has not been insulated from these troubled waters, having seen its share of major business challenges during the past few years:

- In 2019, Tesla turned away from its longstanding partnership with Nvidia, electing to develop its own chip hardware for its autonomous driving program (and, later, the D1 chip for its Dojo supercomputer).

- During the pandemic, demand from cryptocurrency miners drove up the price of Nvidia’s graphics cards, infuriating PC gamers. Then the crypto mining market collapsed, leading to a sudden glut of Nvidia graphics cards.

- The US federal government barred Nvidia and other American companies from selling advanced AI chips to China, cutting Nvidia off from the lucrative, booming Chinese automotive market.

- Major investments in Nvidia’s Omniverse (its “metaverse” initiative) haven’t lived up to the hype, as mainstream users continue to balk at adopting expensive and often cumbersome virtual reality headsets.

Silicon Valley Slump? AI to the Rescue!

If Silicon Valley is famous for anything, it’s “The Pivot.”

Business plan not panning out? It’s time to pivot and change direction.

Indeed, in recent weeks there has been a feeding frenzy around artificial intelligence (reminiscent of the dot-com boom at the turn of the century) – fueled by the breathtaking capabilities of OpenAI’s ChatGPT, which is powered by a large language model.

In response, just about every Silicon Valley tech company is pivoting its business plan to take advantage of this new AI, with many non-tech companies (such as Coca-Cola) also seeking to bolt a “generative AI” component onto their business storyline.

This is good news for Nvidia Co-founder and CEO Jensen Huang, who has been championing long-term strategic investments in artificial intelligence and is now riding a wave of enthusiasm for the company (and its stock, which has more than doubled in price since October 2022).

What Caught Our Eye at the Nvidia GTC Developer Conference

NVIDIA Co-Founder and CEO Jensen Huang Delivers the Keynote Address to the Nvidia GTC 2023 Developer Conference

In our view, three major initiatives stood out at the Nvidia GTC Developer Conference.

The Rise of Digital Twins, including Autonomous Vehicles

The first initiative is the rise of Digital Twins, which is poised to be the breakout application for Nvidia’s Omniverse investment initiatives.

Here, Nvidia is striking directly at the lucrative enterprise market rather than taking on indirect competitors in the gaming and entertainment space (such as Fortnite/Unreal Engine and Roblox).

Huang promoted the idea of using Nvidia Omniverse technology to create digital twins of factories, such as a new BMW automobile manufacturing facility.

Robotic assembly and material handling operations can be simulated and tested before they enter service using the Nvidia Isaac Sim software application. Here, the lighthouse customer is none other than Amazon, which used the new software stack to develop a fully autonomous warehouse robot.

In a sign of how seriously Nvidia is taking the enterprise market, it announced several new cloud initiatives, starting with Omniverse Cloud, a partnership with Microsoft (powered by the Azure cloud), which Huang said will bring Nvidia technology to Microsoft’s huge user base.

Perhaps controversially, we include autonomous driving in the Digital Twins category, because a self-driving car must build complex 3D models of real-world physical environments in order to make real-time, life-or-death decisions.

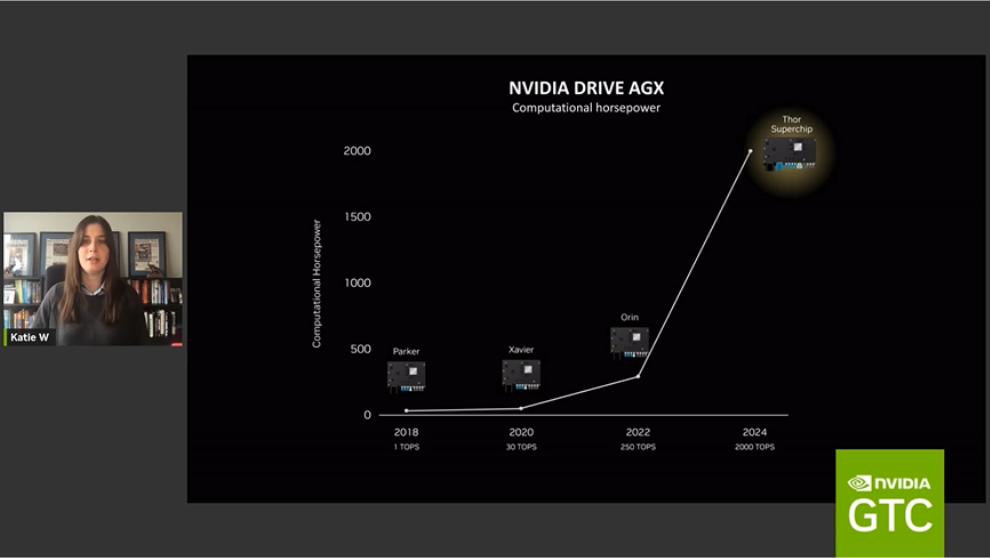

Katie Washabaugh, Nvidia Automotive Product Marketing Manager, conducted a seminar on Nvidia’s advances in autonomous driving, pointing out that Nvidia’s “Centralized AI Compute” platform (the brains behind Nvidia’s vision of a self-driving car) needs to process 32 terabytes of data for every 6 hours of driving, at a rate of 254 trillion operations per second.
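As a rough illustration of what the 32-terabytes-per-6-hours figure implies, the short Python sketch below converts it into an average sustained data rate. It assumes decimal units (1 TB = 10^12 bytes) and a perfectly even flow of data, which real sensor traffic will not have; the helper name is our own, for illustration only.

```python
# Back-of-the-envelope sketch: convert "terabytes per N hours of driving"
# into an average data rate. Assumes decimal units (1 TB = 10**12 bytes,
# 1 GB = 10**9 bytes) and an even data flow; real sensor traffic is burstier.


def average_rate_gb_per_s(terabytes: float, hours: float) -> float:
    """Average data rate in gigabytes per second (hypothetical helper)."""
    total_bytes = terabytes * 10**12
    total_seconds = hours * 60 * 60
    return total_bytes / total_seconds / 10**9


rate = average_rate_gb_per_s(32, 6)
# Multiply by 8 to express the same rate in gigabits per second.
print(f"{rate:.2f} GB/s, or about {rate * 8:.1f} Gbit/s")
```

Under those simplifying assumptions, the platform has to absorb roughly 1.5 gigabytes (about 12 gigabits) of sensor data every second, on average.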

The newest-generation Nvidia Drive Thor “superchip” represents a five-order-of-magnitude increase in processing power compared with the first generation, and is now capable of 2,000 teraflops (2,000 trillion floating point operations per second).

AI Generative Content and Large Language Models

Since OpenAI introduced its revolutionary ChatGPT application (built on GPT-3.5) in November 2022, generative AI content has been on the lips of everyone in the software industry (and beyond).

This past week, OpenAI introduced the even more capable GPT-4 large language model, which can accept images as well as text as input. Hoping not to fade into the background, Google formally launched its ChatGPT competitor, dubbed “Bard” (in what we assume is a Shakespeare reference), today while Nvidia CEO Jensen Huang was presenting his keynote.

Nvidia wants to be at the heart of the hardware/software stack that powers the large language models responsible for generative AI text (à la ChatGPT), as well as generative AI image technology powered by Stable Diffusion.

According to some analysts, Nvidia may have as much as 95% market share when it comes to the high-performance AI specialty chips used by leading AI developers in the Generative Content space, such as OpenAI and Stability AI.

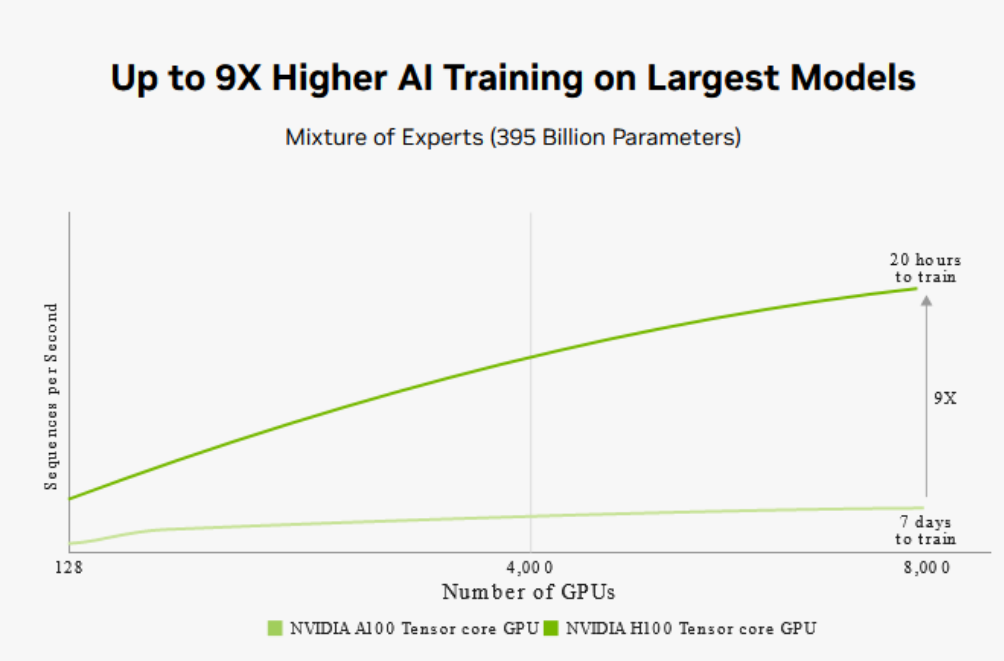

Nvidia’s current-generation A100 Tensor Core GPUs cost about $10,000 each, and Stability AI has reportedly grown its inventory of A100 chips from just 32 to over 5,400.
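To put those numbers in perspective, the sketch below multiplies the reported fleet sizes by the quoted unit price. It assumes a flat $10,000 per chip and ignores volume discounts, servers, networking, power, and staffing, so it is a rough chip-only estimate, not an actual figure reported by Stability AI. The constant and helper names are hypothetical.

```python
# Rough sketch: chip-only cost of a GPU fleet at a flat unit price.
# Assumes the ~$10,000 per-A100 figure quoted above; excludes servers,
# networking, power, and discounts, so treat results as approximate.

A100_UNIT_PRICE_USD = 10_000  # assumption: flat price per chip


def fleet_cost_usd(num_chips: int, unit_price: int = A100_UNIT_PRICE_USD) -> int:
    """Chip-only cost of a fleet (hypothetical helper)."""
    return num_chips * unit_price


before = fleet_cost_usd(32)
after = fleet_cost_usd(5_400)
print(f"32 chips:    ${before:,}")
print(f"5,400 chips: ${after:,}")
```

Even counting the chips alone, growing from 32 to 5,400 A100s implies an outlay on the order of $54 million, which helps explain why cost is such a barrier for smaller players.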

Today, during his keynote address, Nvidia CEO Jensen Huang presented the product roadmap for the next-generation chip, the H100 Tensor Core GPU, which Nvidia claims offers an order-of-magnitude leap in AI processing power.

High Investment Cost Required to Develop and Deploy Interactive Large Language Models Remains a Barrier to Entry

When it comes to ruling the AI-powered universe, just how far ahead are hardware companies, such as Nvidia, and software companies (like OpenAI and Stability AI) with their billion-dollar-plus valuations?

Will it be possible for other competitors to catch up?

Certainly, the major players, such as Google and Meta, and even Tesla (with its custom Dojo computer), have the financial resources to recruit top-level AI talent and fund the data-intensive computing centers required to create viable large language models, the backbone of generative AI systems.

(These companies also have access to the critical training data: Google via its search engine and online translation tools, Meta via its Facebook and Instagram social media apps, and Tesla via its 24×7 collection of driving data from Tesla owners.)

Thanks to Moore’s law, the power of AI computing technology may continue to rise exponentially, but will generative AI chips, such as those announced at the Nvidia GTC Developer Conference, ever come down enough in price to be accessible to ordinary academic researchers and startup ventures – or will they remain in the hands of well-funded Silicon Valley unicorns?

FOSS says, “Hold my Beer” – maybe.

Given that Microsoft reportedly spent several hundred million dollars (including the purchase of tens of thousands of Nvidia A100 chips) to build its Bing AI chatbot (based on an investment partnership with OpenAI, the creator of ChatGPT), is there any hope for mere mortals to get in the game?

Fortunately, there has always been a small but vocal contingent of open-source software advocates who can make it happen.

A “free and open source software” (FOSS) project at Stanford University offers hope for independents, startups, and academic researchers wanting to gain access to the class of powerful large language models required to create Generative AI software projects.

We say “hope” because the Stanford project, dubbed Alpaca (a play on the name of its underlying language model, Meta’s LLaMA 7B), lasted just about a week before its public demo was pulled down. It succumbed to a combination of the same types of errors that plagued the Bing AI chatbot (including making up information, known as “hallucinations” in the AI world), as well as intellectual property issues stemming from its use of OpenAI’s text-davinci-003 model to generate training data. (Despite the name, OpenAI’s system is proprietary.)

Despite its short life, the Stanford project did demonstrate that it was possible to fine-tune a capable large language model at modest cost, estimated at well below $1,000. If you are interested in having a look, the source code is still up on GitHub.

Whether Proprietary or FOSS, AI Implementations have many Challenges ahead on the Road to Artificial General Intelligence (AGI)

The short-lived Stanford project does highlight some of the major challenges facing the industry, from creating AI safety protocols to preventing (or controlling) hallucinations, as well as a broad spectrum of untested intellectual property questions.

Despite these challenges, we are excited for the future of AI as software researchers pursue the goal of an Artificial General Intelligence (AGI) solution.

Formaspace is Your Partner for Tech Lab Solutions

If you can imagine it, we can build it, here at our Formaspace factory headquarters in Austin, Texas.

Contact your Formaspace Design Consultant today to see how we can work together to make your development labs and data centers more ergonomic, productive, and efficient.